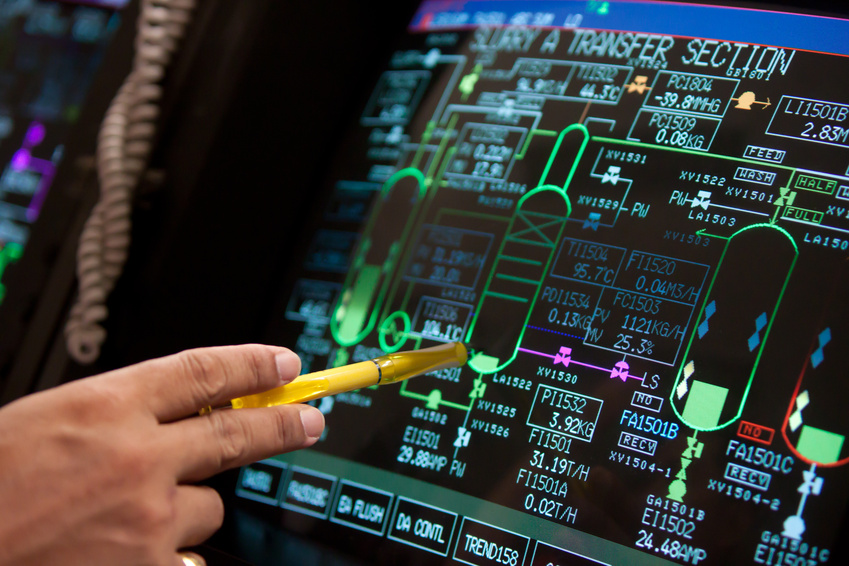

More than six million customers in the databases, but only three million real customers? How do you avoid migrating the whole burden of duplicate and multiple customer records into a newly implemented system? How do you spot different electricity supply points that in fact belong to one and the same customer and payer? And finally, how do you make sure you can improve data quality automatically, on your own, at every stage of migrating data to the new system? These were the questions our Client, a leading electricity supplier, had to ask itself.

Today, migrating data from several systems to a single, newly implemented one is rarely a one-time event. It is usually a staggered process, completed in stages. That is also the case with our Client, a leading electricity supplier in Poland. Naturally, this brings additional challenges for the migration team. Spreading the process over many months means, among other things, that a one-time data cleansing before loading into the new system is not enough. Data that has not yet been migrated continues to live on in the old systems being phased out, and it still has to be verified and corrected automatically before each transfer. For both cost and organizational reasons, it is impractical to hire an external supplier to cleanse the data every time.

Drawing on the rules and algorithms developed during an earlier one-time data cleansing project for the same Client, Sanmargar consultants built a solution for automatic, regular data cleansing using Sanmargar DQS technology. It enabled the Client to cleanse data on its own as many times as needed, at points set by the migration project schedule, without having to coordinate with the provider or depend on the provider's availability. The Client also gained the ability to continuously monitor the quality of data migrated to the new system in the earlier stages of the project, and to correct it where needed.

Duplicate customers in the old source systems became a separate issue during the migration project. There were duplicates not only between systems but also within each system. To illustrate the scale: the databases held twice as many customer records as there were actual customers. Transferring these excess records to the new system clearly had to be avoided. There were also problems with correctly identifying customers who have multiple electricity supply points and were therefore recorded in the system as several different customers. Such cases need to be identified clearly for a range of business reasons.

A customer deduplication module was developed for this purpose as part of the automatic data cleansing and standardization tool. Working on standardized customer data and deduplication rules developed specifically for the task, the module not only flagged duplicate customers in the data sets being deduplicated but also assigned a common group ID wherever different electricity supply points in fact belonged to one and the same customer. As with automatic data cleansing, the deduplication processes were designed so that the Client could run them regularly, at any time, without any involvement from the provider.
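The general idea of matching standardized records and assigning group IDs can be illustrated with a short Python sketch. This is not Sanmargar DQS or the Client's actual rule set. It assumes a hypothetical record layout (`CustomerRecord` with `record_id`, `supply_point_id`, `name`, `address` and an optional `tax_id`) and two illustrative exact-match rules: the same identifier, or the same name and address after standardization. Records sharing a match key are merged with a union-find structure, so matches chain transitively into one group. Within a group, a repeated supply point marks a duplicate record, while different supply points mark one customer with several supply points.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class CustomerRecord:
    # Hypothetical layout; the real source systems' fields will differ.
    record_id: str
    supply_point_id: str
    name: str
    address: str
    tax_id: str = ""  # hypothetical customer identifier; may be missing


def standardize(text: str) -> str:
    """Casefold, replace punctuation with spaces and collapse whitespace."""
    text = re.sub(r"[^\w\s]", " ", text.casefold())
    return " ".join(text.split())


def standardize_tax_id(tax_id: str) -> str:
    """Keep digits only, so '123-456-32-18' and '1234563218' compare equal."""
    return re.sub(r"\D", "", tax_id)


class UnionFind:
    """Disjoint sets whose root is always the smallest member."""

    def __init__(self, items):
        self.parent = {item: item for item in items}

    def find(self, item):
        while self.parent[item] != item:
            self.parent[item] = self.parent[self.parent[item]]  # path halving
            item = self.parent[item]
        return item

    def union(self, a, b):
        root_a, root_b = self.find(a), self.find(b)
        if root_a != root_b:
            # Hang the larger root under the smaller one, so the group ID
            # is deterministic: the smallest record_id in the group.
            low, high = sorted((root_a, root_b))
            self.parent[high] = low


def match_keys(record):
    """Hypothetical deduplication rules, expressed as exact-match keys."""
    keys = []
    tax_id = standardize_tax_id(record.tax_id)
    if tax_id:  # Rule 1: same identifier
        keys.append(("tax_id", tax_id))
    name, address = standardize(record.name), standardize(record.address)
    if name and address:  # Rule 2: same name AND same address
        keys.append(("name_address", name, address))
    return keys


def assign_groups(records):
    """Return {record_id: (group_id, is_duplicate)}."""
    uf = UnionFind(r.record_id for r in records)
    first_seen = {}
    for record in records:
        for key in match_keys(record):
            if key in first_seen:
                uf.union(first_seen[key], record.record_id)
            else:
                first_seen[key] = record.record_id

    result, seen_points = {}, set()
    for record in records:
        group_id = uf.find(record.record_id)
        # Same customer group AND same supply point => duplicate record.
        # Same group, different supply point => one customer, several points.
        point = (group_id, record.supply_point_id)
        result[record.record_id] = (group_id, point in seen_points)
        seen_points.add(point)
    return result


records = [
    CustomerRecord("A1", "PP-001", "Jan Kowalski", "ul. Lipowa 5, Kraków", "123-456-32-18"),
    CustomerRecord("B7", "PP-001", "KOWALSKI, Jan", "Lipowa 5 Kraków", "1234563218"),
    CustomerRecord("C3", "PP-002", "Jan Kowalski", "ul. Lipowa 5, Kraków"),
    CustomerRecord("D9", "PP-003", "Anna Nowak", "ul. Polna 1, Gdańsk"),
]
groups = assign_groups(records)
```

In this sample, B7 matches A1 on the identifier and shares its supply point, so it is flagged as a duplicate in group A1; C3 matches A1 on name and address but has a different supply point, so it joins group A1 as a second supply point of the same customer; D9 stays in a group of its own. The first record seen for each supply point is kept as the master, so the result depends on input order. Rules this strict are only a starting point; real rule sets typically add fuzzy comparison of names and addresses.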